constantly under construction…

Datasets

Occluded Articulated Human Body Dataset

An annotated dataset for human body pose extraction and tracking under occlusions.

Human Body Pose Estimation

A model-based approach for markerless articulated full body pose extraction and tracking in RGB-D sequences. A cylinder-based model is employed to represent the human body. For each body part a set of hypotheses is generated and tracked over time by a Particle Filter. To evaluate each hypothesis, we employ a novel metric that considers the reprojected Top View of the corresponding body part. The latter, in conjunction with depth information, effectively copes with difficult and ambiguous cases, such as severe occlusions.
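The predict, weight and resample cycle of a Particle Filter can be illustrated with a minimal sketch. It tracks a single scalar (think of one joint angle of one body part) and scores hypotheses with a Gaussian likelihood; this likelihood is only a stand-in assumption for the Top View reprojection metric described above, which is not reproduced here. The function name `particle_filter_step` and the parameters `motion_std` and `obs_std` are hypothetical.

```python
import math
import random


def particle_filter_step(particles, observation, motion_std=0.05, obs_std=0.1, rng=random):
    """One predict/weight/resample cycle over scalar pose hypotheses."""
    # Predict: diffuse every hypothesis with Gaussian motion noise.
    predicted = [p + rng.gauss(0.0, motion_std) for p in particles]
    # Weight: score each hypothesis against the observation.
    # (Gaussian stand-in; the actual method uses a reprojection-based metric.)
    weights = [math.exp(-0.5 * ((observation - p) / obs_std) ** 2) for p in predicted]
    total = sum(weights)
    if total == 0.0:
        # All hypotheses are far from the observation: fall back to uniform weights.
        weights = [1.0 / len(predicted)] * len(predicted)
    else:
        weights = [w / total for w in weights]
    # Estimate: weighted mean of the hypotheses.
    estimate = sum(p * w for p, w in zip(predicted, weights))
    # Resample: draw a new hypothesis set proportionally to the weights.
    resampled = rng.choices(predicted, weights=weights, k=len(predicted))
    return resampled, estimate


rng = random.Random(0)  # fixed seed so the run is repeatable
particles = [rng.uniform(-1.0, 1.0) for _ in range(500)]
for z in [0.30, 0.32, 0.35, 0.33]:  # made-up observations of the tracked value
    particles, estimate = particle_filter_step(particles, z, rng=rng)
```

After a few observations the hypotheses concentrate around the observed value, and `estimate` follows it.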

Selected publications:

Full-body Pose Tracking – the Top View Reprojection Approach. Sigalas M., Pateraki M., Trahanias P., 2015. IEEE Transactions on Pattern Analysis and Machine Intelligence. [doi]

Robust Articulated Upper Body Pose Tracking under Severe Occlusions. Sigalas M., Pateraki M. and Trahanias P., 2014. In Proc. of the IEEE/RSJ Intl. Conference on Intelligent Robots and Systems (IROS), 14-18 September, Chicago, USA. [doi] [pdf] [bib]

3D reconstruction and path-tracing global illumination of the Macedonian Tomb of Philip II

Creation of photorealistic 3D models for Cultural Heritage based on a 3D modelling and simulation pipeline. The Macedonian Tomb of Philip II was 3D modeled based on architectural-archaeological plans, reconstructed and rendered.

Selected publications:

A 3D Modelling and Photorealistic Simulation Pipeline for 3D Heritage Edifices. Pateraki M., Papagiannakis G., 2018. In: ERCIM News 113, Research and Innovation, April 2018. [link]

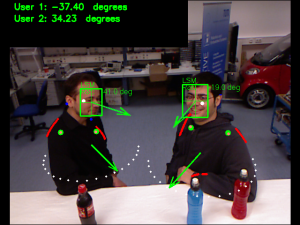

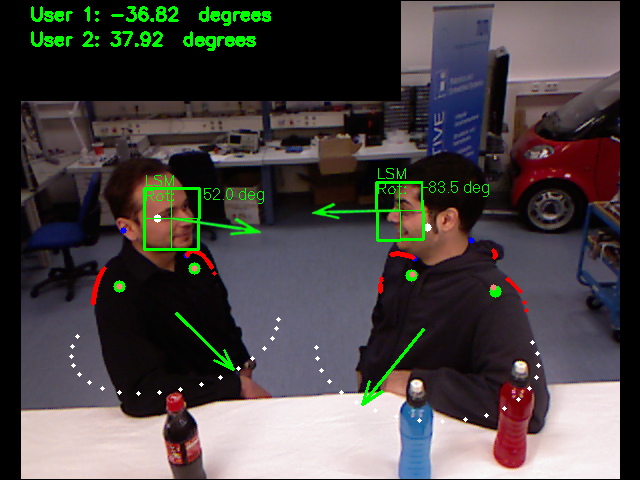

Estimation of attentive cues: torso pose and head pose

Torso and head pose, as forms of nonverbal communication, support the derivation of people’s focus of attention, a key variable in the analysis of human behaviour in HRI paradigms encompassing social aspects. Towards this goal, we have developed a model-based approach for torso and head pose estimation that overcomes key limitations in free-form interaction scenarios, including partial intra- and inter-person occlusions.

Selected publications:

Visual estimation of attentive cues in HRI: The case of torso and head pose. Sigalas M., Pateraki M. and Trahanias P., 2015. In Computer Vision Systems, Lecture Notes in Computer Science, Volume 9163, pp. 375-388. Proc. of the 10th Intl. Conference on Computer Vision Systems (ICVS), 6-9 July, Copenhagen, Denmark. [doi] [bib]

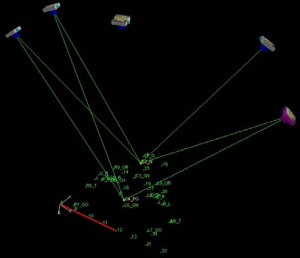

Multi-hypothesis 3D object pose tracking in image sequences

3D object pose tracking from monocular cameras. Data association is performed via a variant of the Iterative Closest Point algorithm, which makes the tracker robust to noise and other artifacts. We re-initialise the hypothesis space based on the re-projection error between hypothesized models and observed image objects. The use of multiple hypotheses and correspondence refinement leads to a robust framework.
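The data association idea behind Iterative Closest Point can be sketched in a deliberately simplified setting: plain point-to-point ICP between two 2D point sets. This is not the variant used in the work above, which relates 3D models to monocular image observations; the function names `best_rigid_2d`, `transform` and `icp_2d` and the sample points are assumptions made for illustration.

```python
import math


def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src points onto dst."""
    n = len(src)
    scx = sum(x for x, _ in src) / n
    scy = sum(y for _, y in src) / n
    dcx = sum(x for x, _ in dst) / n
    dcy = sum(y for _, y in dst) / n
    cross = dot = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - scx, sy - scy  # centred source point
        bx, by = dx - dcx, dy - dcy  # centred destination point
        cross += ax * by - ay * bx
        dot += ax * bx + ay * by
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dcx - (c * scx - s * scy), dcy - (s * scx + c * scy)


def transform(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(model, observed, iterations=20):
    """Alternate nearest-neighbour association and rigid re-alignment."""
    current = list(model)
    for _ in range(iterations):
        # Data association: pair each model point with its closest observed point.
        matches = [
            min(observed, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
            for p in current
        ]
        # Refinement: best rigid motion for the current correspondences.
        theta, tx, ty = best_rigid_2d(current, matches)
        current = transform(current, theta, tx, ty)
    return current


model = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 3.0), (1.0, 1.5)]
observed = transform(model, 0.1, 0.2, -0.1)  # slightly rotated and shifted copy
aligned = icp_2d(model, observed)
```

Because ICP only converges from a reasonably close starting pose, running it from several initial hypotheses and keeping the one with the lowest residual is a natural complement, in the spirit of the multi-hypothesis tracking described above.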

Selected publications:

Robust multi-hypothesis 3D object pose tracking. Chliveros G., Pateraki M., Trahanias P., 2013. In Proc. of the 9th Intl. Conference on Computer Vision Systems (ICVS), LNCS 7963, pp. 234-243, Springer-Verlag, St. Petersburg, Russia, July 16-18, 2013. [doi] [pdf] [bib]

A framework for 3D object identification and tracking. Chliveros G., Figueiredo R.P., Moreno P., Pateraki M., Bernardino A., Santos-Victor J. and Trahanias P., 2014. In Proc. of the 9th International Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP2014), 5-7 January, Lisbon, Portugal. [doi] [pdf] [bib]

Application of dynamic distributional clauses for multi-hypothesis initialization in model-based object tracking. Nitti D., Chliveros G., Pateraki M., De Raedt L., Hourdakis E. and Trahanias P., 2014. In Proc. of the 9th International Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP2014), 5-7 January, Lisbon, Portugal. [doi] [pdf] [bib]

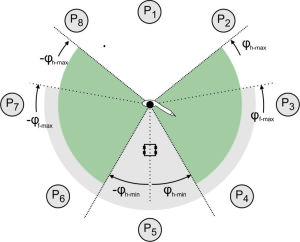

Visual estimation of pointed targets via fusion of face pose and hand orientation

A novel approach based on the Dempster–Shafer theory of evidence fuses information from two input streams: head pose, estimated by visually tracking the off-plane rotations of the face, and hand pointing orientation. It also takes into account prior information about the location of possible pointed targets to decide which object is being pointed at. Detailed experimental results validate the effectiveness of the method in realistic application setups.
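As an illustration of the fusion step, the sketch below implements Dempster's rule of combination for two mass functions over a small set of candidate targets. The target names and mass values are made up, and the way the published method derives masses from head pose and hand orientation is not reproduced; `combine` is a hypothetical helper name.

```python
from itertools import product


def combine(m1, m2):
    """Dempster's rule of combination.

    Each mass function maps a frozenset of hypotheses to its mass;
    the masses of one function are assumed to sum to 1.
    """
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        common = a & b
        if common:
            combined[common] = combined.get(common, 0.0) + wa * wb
        else:
            # Evidence assigned to incompatible sets counts as conflict.
            conflict += wa * wb
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    # Normalise by the non-conflicting mass.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}


# Hypothetical candidate targets in the scene.
ALL = frozenset({"cup", "book", "lamp"})

# Made-up evidence: the head pose narrows the target down to cup or book,
# the hand orientation favours the cup; the rest stays on "any target".
head = {frozenset({"cup", "book"}): 0.6, ALL: 0.4}
hand = {frozenset({"cup"}): 0.7, ALL: 0.3}

fused = combine(head, hand)
best = max(fused, key=fused.get)
```

Here the two sources do not conflict, so the fused masses are the plain products: 0.70 on the cup, 0.18 on cup-or-book and 0.12 left uncommitted.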

Selected publications:

Visual estimation of pointed targets for robot guidance via fusion of face pose and hand orientation. Pateraki M., Baltzakis H., Trahanias P., 2014. Computer Vision and Image Understanding. [doi] [bib]

Using Dempster’s rule of combination to robustly estimate pointed targets. Pateraki M., Baltzakis H., Trahanias P., 2012. In Proc. of the IEEE International Conference on Robotics and Automation (ICRA), 14-18 May, St. Paul, Minnesota, USA. [doi] [pdf] [bib]

Detection and tracking of human hands, faces and facial features

An integrated method for tracking hands and faces in image sequences. Hand and face regions are detected as solid blobs of skin-colored, foreground pixels and they are tracked over time using a propagated pixel hypotheses algorithm. A novel incremental classifier is further used to maintain and continuously update a belief about whether a tracked blob corresponds to a facial region, a left hand or a right hand. For the detection and tracking of specific facial features within each detected facial blob, an appearance-based detector and a feature-based tracker are combined. The proposed approach is mainly intended to support natural interaction with autonomously navigating robots and to provide input for the analysis of hand gestures and facial expressions that humans utilize while engaged in various conversational states with a robot.

Selected publications:

Visual tracking of hands, faces and facial features of multiple persons. Baltzakis H., Pateraki M., Trahanias P., 2012. Machine Vision and Applications. [doi] [pdf] [bib]

Tracking of facial features to support human-robot interaction. Pateraki M., Baltzakis H., Kondaxakis P., Trahanias P., 2009. In: Proc. IEEE International Conference on Robotics and Automation (ICRA), 12-17 May, Kobe, Japan. [doi] [pdf] [bib]

Terrestrial laser scanning for surface reconstruction of complex objects

Terrestrial laser scanners deliver a dense point-wise sampling of an object’s surface, and many applications require a surface-like reconstruction. Traditional approaches use meshing algorithms to reconstruct and triangulate the surface represented by the points. Especially in cultural heritage, where complex objects with delicate structures are recorded in highly detailed scans, long and tedious manual clean-up procedures are often required to achieve satisfactory results. We summarize our experience with meshing technology and explore alternative approaches for surface reconstruction, resulting in a speedy and fully automated procedure.

Selected publications:

From point samples to surfaces – on meshing and alternatives. Boehm J., Pateraki M., 2006. In: Proc. of the ISPRS Commission V Symposium Image Engineering and Vision Metrology, IAPRS, Vol. 37, Part B5. [pdf] [bib]

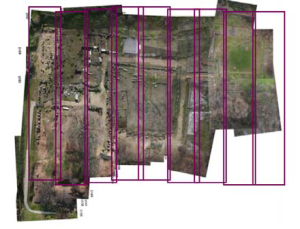

Photogrammetric documentation of archaeological sites

Different paradigms in photogrammetric digital documentation of archaeological sites using UAV and/or terrestrial images.

Selected publications:

Photogrammetric documentation and digital representation of the Macedonian Palace in Vergina – Aegae. Patias P., Paliadeli Ch., Georgoula O., Pateraki M., Stamnas A., Kyriakou N., 2007. In: Proc. XXI International CIPA Symposium, IAPRS, Vol. 16-5/C53 Part 3, pp. 562-566, 1-6 October, Athens, Greece. [pdf] [bib]

Image combination into large Virtual Images for fast 3D modelling of archaeological sites. Pateraki M., Baltsavias E., Patias P., 2002. In: Proc. of the ISPRS Commission V Symposium, IAPRS, Vol. 34, Part 5, pp. 440-445, 2-6 September, Corfu, Greece. [pdf] [bib]

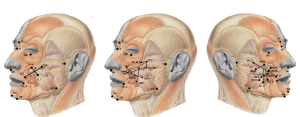

Photogrammetric techniques for medical applications

Use of photogrammetric techniques for craniofacial studies and scoliosis screening.

Selected publications:

3D digital photogrammetric reconstructions for scoliosis screening. Patias P., Stylianidis E., Pateraki M., Chrysanthou Y., Contozis C., Zavitsanakis Th., 2006. In: Proc. of the ISPRS Commission V Symposium Image Engineering and Vision Metrology, IAPRS, Vol. 37, Part B5. [pdf] [bib]

Photogrammetry vs. Anthroposcopy. Pateraki M., Fragkoulidou V., Stoltidou A., 2006. In: Proc. of the ISPRS Commission V Symposium Image Engineering and Vision Metrology, IAPRS, Vol. 37, Part B5. [pdf] [bib]

Adaptive multi-Image Matching algorithm for DSM generation from airborne linear array CCD data

ADS40, produced by Leica Geosystems GIS & Mapping (LGGM), offers on a single camera system the possibility to acquire both panchromatic and multispectral images in up to 10 channels (100% overlap), incorporating GPS and INS technology for direct sensor orientation. The radiometric and geometric characteristics of the sensor can reinforce matching in automated processes through the use of multiple channels with small perspective distortions, direct georeferencing and superior radiometric quality. The research focused on the development of a novel matching algorithm that makes use of a multi-image, multi-template approach with geometrical constraints exploiting quasi-epipolar lines and combining both feature- and area-based approaches, where edges with attributes, extracted points (edgels) and grid points are matched to generate a dense DSM.

Selected publications:

Adaptive multi-Image Matching algorithm for DSM generation from airborne linear array CCD data. Pateraki M., 2005. Ph.D. Thesis, Diss. ETH Zurich, Nr. 15915, Institute of Geodesy and Photogrammetry, Mitteilung No. 86. [pdf] [bib] [doi]

Experiences on automatic image matching for ADS40 pushbroom sensor data. Pateraki M., Baltsavias E., Recke U., 2004. In: Proc. of the ISPRS Congress, IAPRS, Vol. 35, Part B2, pp. 402-407, July, Istanbul. [pdf] [bib]

Analysis of a DSM generation algorithm for the ADS40 Airborne Pushbroom Sensor. Pateraki M., Baltsavias E., 2003. In: A. Gruen, H. Kahmen (Eds.), Proc. 6th Conference on Optical 3D Measurement Techniques, Vol. 1, published by IGP, ETHZ, Zurich, pp. 48–54. [pdf] [bib]

Surface discontinuity modelling via LSM and use of edges

Exploitation of edge information in the least squares matching (LSM) approach for DSM generation and discontinuity modelling. LSM, when used for single points that lie close to or on edges, does not suffice to model discontinuities, especially when the patch size is large. To alleviate this problem, LSM is firstly extended to edgels using an approximation of the rotation between search image and template, computed from a signal analysis.

Secondly, the model is extended to edge features with topological attributes (edges and vertices with link information).

Selected publications:

Surface discontinuity modelling by LSM through patch adaptation and Use of edges. Pateraki M., Baltsavias E., 2004. In: Proc. of the ISPRS Congress, IAPRS, Vol. 35, Part B3, pp. 522-527, July, Istanbul. [pdf] [bib]