Sigalas M., Pateraki M., Trahanias P., 2015. Full-body Pose Tracking – the Top View Reprojection Approach. IEEE Transactions on Pattern Analysis and Machine Intelligence [doi].

Abstract:

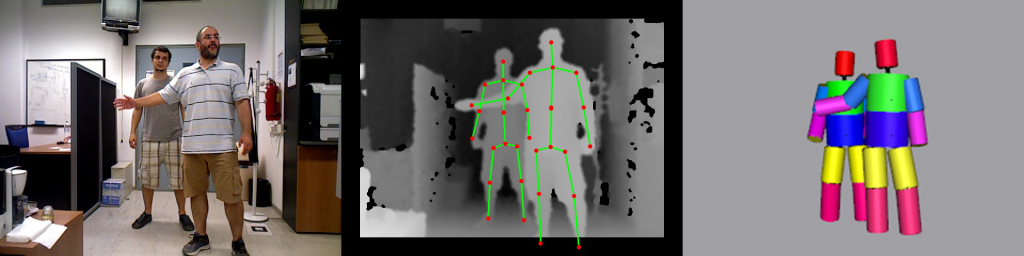

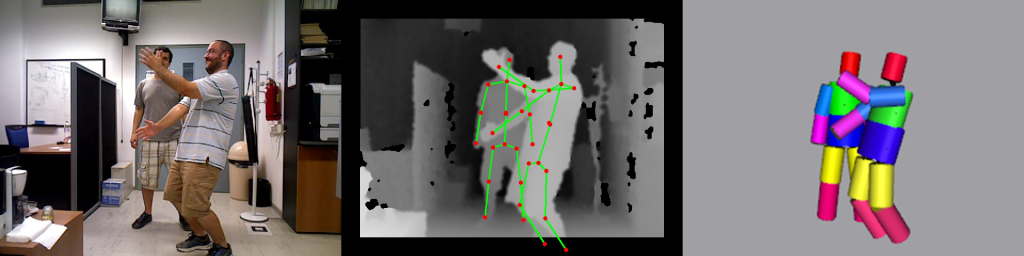

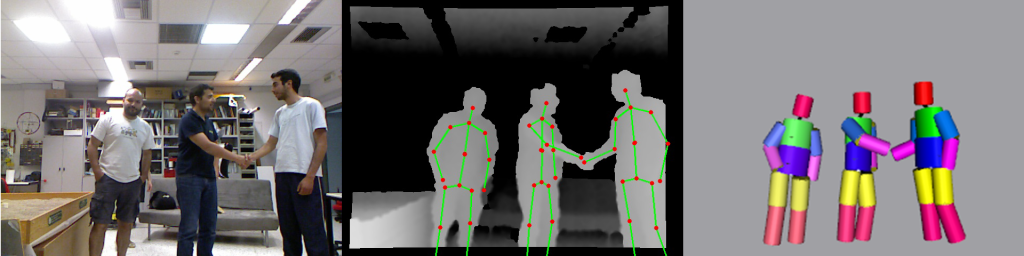

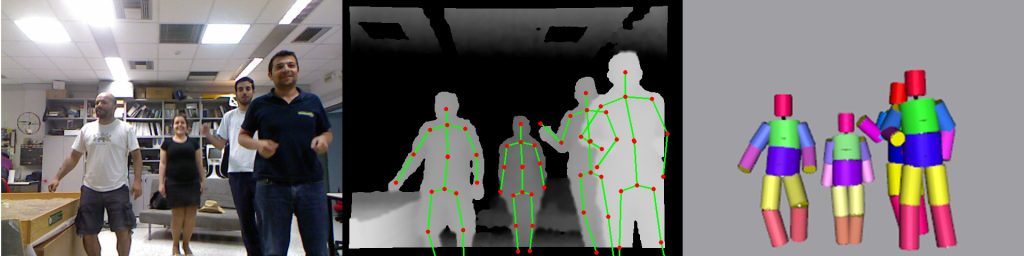

The recent introduction of low-cost depth cameras has triggered a number of interesting works, pushing forward the state of the art in human body pose extraction and tracking. However, despite this remarkable progress, many contemporary methods cope inadequately with complex scenarios involving multiple interacting users in the presence of severe inter- and intra-occlusions. In this work, we present a model-based approach for markerless articulated full-body pose extraction and tracking in RGB-D sequences. A cylinder-based model is employed to represent the human body. For each body part, a set of hypotheses is generated and tracked over time by a Particle Filter. To evaluate each hypothesis, we employ a novel metric that considers the reprojected Top View of the corresponding body part. This metric, in conjunction with depth information, effectively copes with difficult and ambiguous cases, such as severe occlusions. For evaluation purposes, we conducted several series of experiments using data from a public human action database, as well as our own data involving a varying number of interacting users. The performance of the proposed method has been further compared against that of Microsoft’s Kinect SDK and NiTE™ using ground truth information. The results obtained attest to the effectiveness of our approach.
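
The per-part tracking loop outlined in the abstract (hypotheses propagated by a Particle Filter and scored on a Top View reprojection) can be illustrated with a minimal sketch. This is not the authors' implementation: the 2D state (the ground-plane position of a vertical cylinder's axis), the random-walk motion model, and the scoring function `top_view_score` (the fraction of depth points, projected onto the ground plane, that fall inside the cylinder's circular footprint) are all hypothetical stand-ins. The paper's actual metric also uses depth information in ways not reproduced here.

```python
import math
import random
from itertools import accumulate
from typing import List, Tuple

Point3 = Tuple[float, float, float]   # (x, y, z); y is height above the ground plane
Point2 = Tuple[float, float]          # (x, z) on the ground plane (top view)


def top_view(points: List[Point3]) -> List[Point2]:
    """Reproject 3D points onto the ground plane by dropping the height coordinate."""
    return [(x, z) for x, _y, z in points]


def top_view_score(center: Point2, footprint: List[Point2], radius: float) -> float:
    """HYPOTHETICAL score: fraction of top-view points inside the circular
    footprint of a vertical cylinder whose axis passes through `center`."""
    if not footprint:
        return 0.0
    cx, cz = center
    r2 = radius * radius
    inside = sum(1 for x, z in footprint if (x - cx) ** 2 + (z - cz) ** 2 <= r2)
    return inside / len(footprint)


def systematic_resample(particles: List[Point2], weights: List[float],
                        rng: random.Random) -> List[Point2]:
    """Draw len(particles) samples with probability proportional to `weights`."""
    n = len(particles)
    cumulative = list(accumulate(weights))
    cumulative[-1] = 1.0                      # guard against float round-off
    start = rng.random() / n
    out, i = [], 0
    for j in range(n):
        position = start + j / n
        while position > cumulative[i]:
            i += 1
        out.append(particles[i])
    return out


def pf_step(particles: List[Point2], points: List[Point3], radius: float,
            motion_std: float, rng: random.Random):
    """One predict / weight / estimate / resample cycle for a single body part."""
    # Predict: random-walk motion model (an assumption, not taken from the paper).
    predicted = [(x + rng.gauss(0.0, motion_std), z + rng.gauss(0.0, motion_std))
                 for x, z in particles]
    # Weight: score each hypothesis against the top-view reprojection of the points.
    footprint = top_view(points)
    scores = [top_view_score(p, footprint, radius) + 1e-9 for p in predicted]
    total = sum(scores)
    weights = [s / total for s in scores]
    # Estimate: weighted mean of the hypotheses.
    estimate = (sum(w * p[0] for w, p in zip(weights, predicted)),
                sum(w * p[1] for w, p in zip(weights, predicted)))
    # Resample so that hypotheses concentrate on high-scoring regions.
    return systematic_resample(predicted, weights, rng), estimate


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic depth points on the surface of a limb (radius 0.1 m) centred at x=1.0, z=2.0.
    points = [(1.0 + 0.1 * math.cos(a), h, 2.0 + 0.1 * math.sin(a))
              for a in [k * 2 * math.pi / 36 for k in range(36)]
              for h in (0.2, 0.6, 1.0)]
    particles = [(rng.uniform(0.0, 2.0), rng.uniform(1.0, 3.0)) for _ in range(500)]
    for _ in range(20):
        particles, estimate = pf_step(particles, points, radius=0.15,
                                      motion_std=0.02, rng=rng)
    print(f"estimated axis position: x={estimate[0]:.2f}, z={estimate[1]:.2f}")
```

Dropping the height coordinate is what makes the top view useful in this toy setting: every point on the limb's surface collapses onto a small ring, so a hypothesis is rewarded for explaining the limb's footprint regardless of how much of its length is visible.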

- Qualitative results with multiple users in the scene (video):

- Comparative evaluation of our methodology against the Microsoft Kinect SDK skeletonization module using ground truth data (video):