Sigalas M., Pateraki M. and Trahanias P., 2014. Robust articulated upper body pose tracking under severe occlusions. In Proc. of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 14-18 September, Chicago, USA.

Abstract:

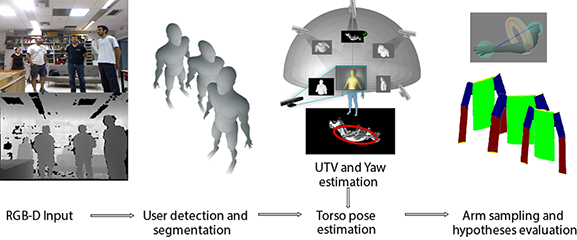

Articulated human body tracking is one of the most thoroughly examined, yet still challenging, tasks in Human-Robot Interaction. The emergence of low-cost, real-time depth cameras has greatly advanced the state of the art in the field. Nevertheless, overall performance in complex, real-life scenarios remains an open problem, mainly due to the high dimensionality of the task, the frequent presence of severe occlusions in the observed scene data, and errors in the segmentation and pose initialization processes. In this paper we propose a novel model-based approach for markerless pose detection and tracking of the articulated upper body of multiple users in RGB-D sequences. The main contribution of our work lies in the introduction and further development of a virtual User Top View, a hypothesized view aligned to the main torso axis of each user, which is used to robustly estimate the 3D torso pose even under severe intra- and inter-personal occlusions, without requiring arbitrary initialization. The extracted 3D torso pose, together with a human arm kinematic model, is used to generate arm hypotheses; these are tracked via Particle Filters, and ordered rendering of them is used to detect possible occlusions and collisions. Experimental results in realistic scenarios, as well as comparative tests against the NiTE™ user generator middleware using ground-truth data, validate the effectiveness of the proposed method.


- Supplementary material (video):