Sigalas M., Pateraki M., Oikonomidis I., Trahanias P., 2013. Robust Model-Based 3D Torso Pose Estimation in RGB-D Sequences. In Proc. of the 2nd IEEE Workshop on Dynamic Shape Capture and Analysis, held within Intl. Conference on Computer Vision (ICCV), 7 December, Sydney, Australia.

Abstract:

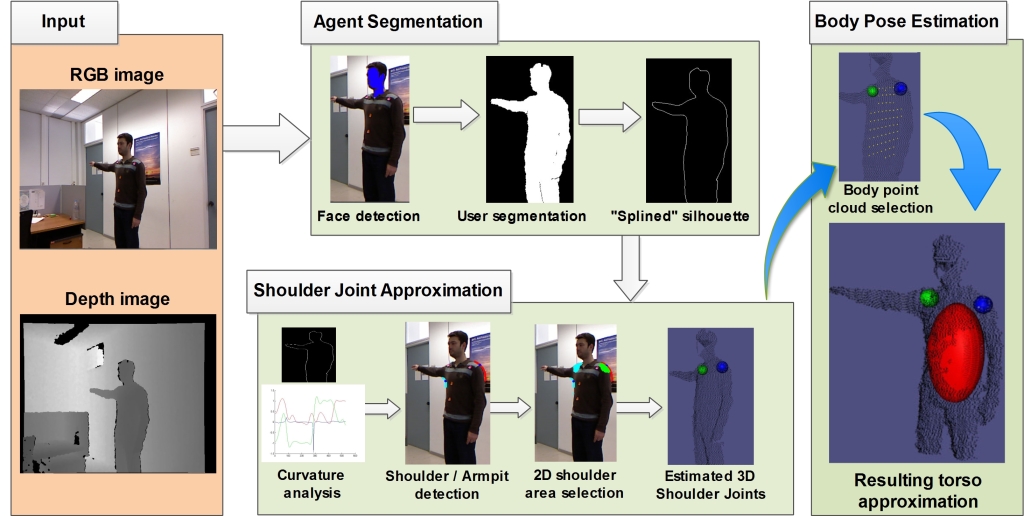

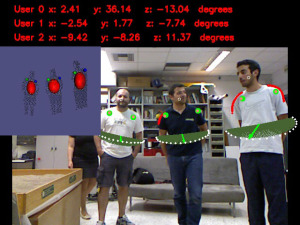

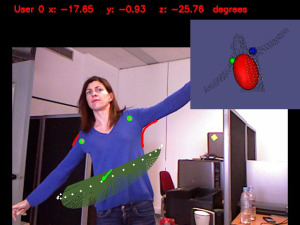

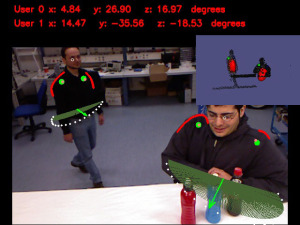

Free-form Human-Robot Interaction (HRI) in naturalistic environments remains a challenging computer vision problem, and in this context the extraction of human body pose information is of utmost importance. Although the emergence of real-time depth cameras has greatly facilitated this task, issues that limit the performance of existing methods in relevant HRI applications remain. In particular, current state-of-the-art approaches inherently require an initialization phase before body pose information can be derived, which is often hard, if not impossible, in complex, realistic scenarios. In this work we present a data-driven, model-based method for 3D torso pose estimation from RGB-D image sequences that eliminates the need for an initialization phase. The detected face of the user guides the generation of shoulder-area hypotheses, based on illumination-, scale- and pose-invariant features computed on the RGB silhouette. Depth point cloud information is then used to approximate the shoulder joints and to model the human torso with a set of 3D geometric primitives, and the 3D torso pose is estimated via a global optimization scheme. Experimental results in various environments, including evaluation against ground-truth data and comparison with the OpenNI User Generator middleware, validate the effectiveness of the proposed method.
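The abstract does not specify the torso primitives or the global optimizer, so the following is only a heavily simplified, hypothetical sketch of the final fitting step, not the authors' method. It assumes a top-down (x, z) slice of the depth point cloud, models the torso cross-section as an ellipse (a horizontal slice through an elliptic cylinder, one plausible primitive) with assumed semi-axes `a = 0.18` m and `b = 0.10` m, takes the torso centre as the midpoint of the two estimated shoulder joints, and substitutes an exhaustive grid search over yaw for the paper's global optimization scheme. All function names and parameter values are illustrative assumptions.

```python
import math
from typing import List, Tuple

# Hypothetical representation: a point (x, z) in a top-down view of the depth cloud, in metres.
Point = Tuple[float, float]


def shoulder_midpoint(left: Point, right: Point) -> Point:
    """Torso centre, assumed to lie halfway between the two estimated shoulder joints."""
    return ((left[0] + right[0]) / 2.0, (left[1] + right[1]) / 2.0)


def ellipse_residual(p: Point, centre: Point, yaw: float, a: float, b: float) -> float:
    """Algebraic distance of p to an ellipse with semi-axes a (shoulder axis) and b (depth axis)."""
    dx, dz = p[0] - centre[0], p[1] - centre[1]
    c, s = math.cos(yaw), math.sin(yaw)
    u = c * dx + s * dz   # coordinate along the shoulder line (torso frame)
    v = -s * dx + c * dz  # coordinate along the torso depth axis (torso frame)
    return (u / a) ** 2 + (v / b) ** 2 - 1.0


def torso_cost(points: List[Point], centre: Point, yaw: float, a: float, b: float) -> float:
    """Sum of squared residuals of all points to the posed elliptical torso model."""
    return sum(ellipse_residual(p, centre, yaw, a, b) ** 2 for p in points)


def estimate_yaw_deg(points: List[Point], centre: Point,
                     a: float = 0.18, b: float = 0.10, step_deg: float = 1.0) -> float:
    """Exhaustive search over yaw in [-90, 90) degrees (stand-in for a global optimizer)."""
    best_yaw, best_cost = 0.0, float("inf")
    for i in range(int(round(180.0 / step_deg))):
        yaw_deg = -90.0 + i * step_deg
        cost = torso_cost(points, centre, math.radians(yaw_deg), a, b)
        if cost < best_cost:
            best_yaw, best_cost = yaw_deg, cost
    return best_yaw


def synthetic_front_surface(centre: Point, yaw_deg: float, a: float, b: float,
                            n: int = 40) -> List[Point]:
    """Synthetic stand-in for real depth data: points on one half of a rotated ellipse,
    assumed to be the camera-facing side of the torso."""
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    pts = []
    for k in range(n):
        t = math.pi + math.pi * k / (n - 1)  # sweep half of the ellipse
        u, v = a * math.cos(t), b * math.sin(t)
        # torso frame -> world frame (inverse of the rotation in ellipse_residual)
        pts.append((centre[0] + c * u - s * v, centre[1] + s * u + c * v))
    return pts


# Usage: a torso 2 m from the camera, rotated by 20 degrees.
true_yaw = 20.0
centre_true = (0.05, 2.0)
ca, sa = math.cos(math.radians(true_yaw)), math.sin(math.radians(true_yaw))
left = (centre_true[0] - 0.18 * ca, centre_true[1] - 0.18 * sa)
right = (centre_true[0] + 0.18 * ca, centre_true[1] + 0.18 * sa)
mid = shoulder_midpoint(left, right)
points = synthetic_front_surface(mid, true_yaw, 0.18, 0.10)
estimated = estimate_yaw_deg(points, mid)
print(estimated)
```

A grid search is trivially global for a single bounded parameter; a full 3D torso pose with several parameters would need a proper global optimizer in its place, and real depth data would add noise and outliers that this noise-free example ignores.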
